This is the third in a series of four articles based on my Jupyter Notebooks exploring quantum computing as a tool for generating random number distributions.

Generating random numbers from specific probability distributions shows us how the quantum state vector reflects the desired probability distribution, and the previous article showed how a variety of such distributions could be achieved. However, quantum computers can also simulate a digital computer. Even though bits are certain and qubits are uncertain, computing on a digital computer can be thought of as working with a special kind of probability distribution: one where a single row of the state vector has 100% probability and all the other rows are zero. This reflects how digital computers are deterministic.

Let’s look at how we might perform digital computing operations on a quantum computer, sticking with high-school level maths. First, we need to introduce some new operations.

X operation

We have already seen the CX, or “constrained swap”, operation. There is a simpler operation called X, which swaps the values in every pair of rows of the state vector that differ only in one specified qubit. So, where the CX operation required specifying two qubits to determine the rows it affects, the X operation requires specifying just one qubit. Where you might think of CX as a “constrained swap”, you can think of X as just a “swap”.

To clarify the X operation, here is an example of how it might be used:

| Qubits | Initial state vector | X(0) | X(1) |
|---|---|---|---|
| \|00> | 1.0 | 0.0 | 0.0 |
| \|01> | 0.0 | 1.0 | 0.0 |
| \|10> | 0.0 | 0.0 | 0.0 |
| \|11> | 0.0 | 0.0 | 1.0 |

The first X swaps the first two rows, as these differ only in qubit 0 (the rightmost qubit). It also swaps the last two rows, but these held the same value, so we don’t see a difference there. The second X swaps rows |01> and |11>, as these differ only in qubit 1 (the leftmost qubit). It also swaps the remaining two rows, |00> and |10>, but again these held the same value, so we don’t see any difference there.
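To make the swaps concrete, here is a minimal sketch of the X operation in plain Python, with no Qiskit involved. It assumes the state vector is stored as a list in row order, with qubit 0 as the rightmost (least significant) bit of the row number; `apply_x` and `show` are hypothetical helpers for illustration only. Running it reproduces the columns of the table above.

```python
# Plain-Python model of a state vector: a list indexed by row number,
# where qubit 0 is the rightmost (least significant) bit of the row.

def apply_x(state, qubit):
    """Return a new state vector with X applied to the given qubit."""
    new_state = list(state)
    for row in range(len(state)):
        partner = row ^ (1 << qubit)  # the row that differs only in this qubit
        new_state[partner] = state[row]
    return new_state


def show(state, n_qubits):
    """Print each row's binary label next to its value."""
    for row, value in enumerate(state):
        print(f"|{row:0{n_qubits}b}>  {value}")


initial = [1.0, 0.0, 0.0, 0.0]   # all probability in row |00>
after_x0 = apply_x(initial, 0)   # swaps |00> with |01>, and |10> with |11>
after_x1 = apply_x(after_x0, 1)  # swaps |00> with |10>, and |01> with |11>
show(after_x1, 2)
```

Because every row is paired with exactly one partner, writing each value into its partner's slot performs all the swaps at once.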

CCX operation

Now that we know about X and CX, you might be wondering whether more constraints can be added to X. They can: a common operation is CCX, a “doubly constrained” version of X, sometimes known as the Toffoli operation.

The CCX operation acts only on rows where two specified qubits are both |1>, and it swaps pairs of those rows that differ only in a third qubit, i.e. a “doubly constrained swap” operation. Here’s what some CCX operations look like on a state vector consisting of three qubits:

| Qubits | Initial state vector | X(1) | CCX(0, 1, 2) | X(0) | CCX(0, 1, 2) |
|---|---|---|---|---|---|
| \|000> (\|0>) | 1.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| \|001> (\|1>) | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| \|010> (\|2>) | 0.0 | 1.0 | 1.0 | 0.0 | 0.0 |
| \|011> (\|3>) | 0.0 | 0.0 | 0.0 | 1.0 | 0.0 |
| \|100> (\|4>) | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| \|101> (\|5>) | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| \|110> (\|6>) | 0.0 | 0.0 | 0.0 | 0.0 | 0.0 |
| \|111> (\|7>) | 0.0 | 0.0 | 0.0 | 0.0 | 1.0 |

Since our examples use Qiskit, qubits are numbered from the right: qubit 0 is the rightmost one, qubit 1 is in the middle, and qubit 2 is the leftmost one. Because the table is starting to get long, I’ve also written each row’s number in brackets next to its qubit identifier. The qubit identifier is a binary number, and its decimal value is the row number, e.g. “011” is the binary number for 3, so I’ve written this row as |011> (|3>).
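The mapping between a row’s binary label and its row number is ordinary base-2 conversion. Here is a small sketch in plain Python, assuming the same ordering where qubit 0 is the rightmost bit; `row_number`, `qubit_value` and `label` are hypothetical helper names.

```python
# Converting between a row's binary label and its row number, with
# qubit 0 as the rightmost (least significant) bit, as in Qiskit.

def row_number(label):
    """Convert a binary label such as "011" to its row number."""
    return int(label, 2)


def qubit_value(row, qubit):
    """Return the value (0 or 1) of the given qubit in the given row."""
    return (row >> qubit) & 1


def label(row, n_qubits):
    """Convert a row number back to its n-qubit binary label."""
    return format(row, f"0{n_qubits}b")


print(row_number("011"))                      # 3
print([qubit_value(3, q) for q in range(3)])  # [1, 1, 0]: qubits 0, 1, 2 of |011>
print(label(6, 3))                            # 110
```

Note that reading qubits 0, 1, 2 in that order lists the label’s digits right to left.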

In this example, the CCX(0, 1, 2) operation swaps rows where qubits 0 (rightmost) and 1 (middle) are |1>, i.e. the rows ending in |11>: rows |3> and |7>. The first time this operation is performed, both of those rows are 0.0, so it looks like nothing happens. The second time, the 1.0 in row |3> is swapped into row |7>, so we see the effect of the swap.
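The same list-based approach can replay the CCX table. In this hypothetical sketch (plain Python, not Qiskit), `apply_ccx` copies every row unchanged except the pairs whose two control qubits are both 1, which are swapped across the target qubit. It records which row holds the 1.0 after each step.

```python
# Plain-Python replay of the CCX table on a 3-qubit state vector.
# Row r holds qubit k in bit k of r (qubit 0 is the rightmost bit).

def apply_x(state, target):
    """Swap every pair of rows that differ only in `target`."""
    new_state = list(state)
    for row in range(len(state)):
        new_state[row ^ (1 << target)] = state[row]
    return new_state


def apply_ccx(state, control1, control2, target):
    """Swap pairs of rows that differ only in `target`, but only where
    both control qubits are 1; every other row keeps its value."""
    new_state = list(state)
    for row in range(len(state)):
        if (row >> control1) & 1 and (row >> control2) & 1:
            new_state[row ^ (1 << target)] = state[row]
    return new_state


steps = [
    ("X(1)", lambda s: apply_x(s, 1)),
    ("CCX(0, 1, 2)", lambda s: apply_ccx(s, 0, 1, 2)),
    ("X(0)", lambda s: apply_x(s, 0)),
    ("CCX(0, 1, 2)", lambda s: apply_ccx(s, 0, 1, 2)),
]

state = [1.0] + [0.0] * 7  # start with 100% in row |000>
hot_rows = []
for name, step in steps:
    state = step(state)
    hot = state.index(1.0)  # the row now holding the 100% probability
    hot_rows.append(hot)
    print(f"after {name}: |{hot:03b}> (|{hot}>)")
```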

Incrementing a 3-bit number

A very common operation on a digital computer is incrementing a number, or in other words, adding one to it. Incrementing 3 results in 4, incrementing 6 results in 7, and so on.

Each row of the state vector represents a different number, i.e. the decimal number corresponding to the binary number for that arrangement of qubits. For a state vector that represents 3 qubits, row |100> is row |4>, while row |110> is row |6>. Incrementing a number can be thought of as taking a state vector with a specific number encoded in it – the row with 100% probability – and turning it into a state vector with a new number encoded in it, specifically the original number plus one. For example, if we start with a state vector with row |4> with 100% probability, incrementing this would result in a new state vector with row |5> having 100% probability.

To implement this sort of algorithm, where we start with one row at 100% probability and want to end with a different row at 100% probability, we only need variants of the X operation. The X, CX and CCX operations only swap rows around, so a state vector that starts with a single row at 100% probability will always end with a single row at 100% probability. This is how they can simulate the deterministic operations of a digital computer.
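One way to see why a single 100% row survives is to describe each operation purely as a rearrangement of row numbers. This sketch is plain Python; `x_gate`, `cx_gate`, `ccx_gate`, `is_permutation` and `apply` are hypothetical helpers, not Qiskit APIs. It checks that each operation sends the eight rows of a 3-qubit state vector to eight distinct rows, then applies a few of them to a state with one row at 100%.

```python
# Each operation is modelled as a function from a row number to the row
# its value moves to. Qubit k is bit k of the row (qubit 0 = rightmost).

def x_gate(target):
    return lambda row: row ^ (1 << target)


def cx_gate(control, target):
    return lambda row: (row ^ (1 << target)) if (row >> control) & 1 else row


def ccx_gate(control1, control2, target):
    def move(row):
        if (row >> control1) & 1 and (row >> control2) & 1:
            return row ^ (1 << target)
        return row
    return move


def is_permutation(gate, n_qubits):
    """True if the gate sends every row to a different row."""
    n_rows = 2 ** n_qubits
    return sorted(gate(row) for row in range(n_rows)) == list(range(n_rows))


def apply(gate, state):
    """Move each row's value to the row the gate sends it to."""
    new_state = [0.0] * len(state)
    for row, value in enumerate(state):
        new_state[gate(row)] = value
    return new_state


gates = [x_gate(0), cx_gate(0, 1), ccx_gate(0, 1, 2), x_gate(2)]
print(all(is_permutation(gate, 3) for gate in gates))

state = [0.0] * 8
state[6] = 1.0  # a single row, |110>, with 100% probability
for gate in gates:
    state = apply(gate, state)
print(sum(1 for value in state if value != 0.0))  # count of nonzero rows
```

Since no two rows are ever sent to the same place, a value can be moved but never split or merged, which is why the state stays “digital”.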

Incrementing a number encoded on the state vector using variants of the X operation is quite straightforward, but we need to think about it in binary notation. If we add one to a number ending in 0, that last digit becomes 1, whereas if we add one to a number ending in 1, that digit becomes 0 and we carry a one to the next place. To achieve this, we can use X on qubit 0 to swap each row ending in |0> with the matching row ending in |1>, or vice versa, and a CX to manage carrying the one into qubit 1. Similarly, we can use a CCX to manage carrying the one into the final place, qubit 2. The order matters: each carry depends on the values the lower qubits had before they were changed, so the CCX comes first, then the CX, and the X on qubit 0 comes last.
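The carry logic can be checked without Qiskit by modelling the state vector as a plain Python list. This is a minimal sketch, not the Qiskit implementation: `apply_x`, `apply_cx`, `apply_ccx`, `increment`, `reversed_increment` and `encode` are hypothetical helpers, and qubit k is assumed to be bit k of the row number (qubit 0 rightmost, as in Qiskit). It increments every 3-bit number, including 7, which wraps round to 0 because there is no fourth qubit to carry into, and it also runs the same three operations in reverse order.

```python
# Plain-Python sketch of incrementing a 3-qubit state vector.
# Row r holds qubit k in bit k of r (qubit 0 is the rightmost bit).

def apply_x(state, target):
    new_state = list(state)
    for row in range(len(state)):
        new_state[row ^ (1 << target)] = state[row]
    return new_state


def apply_cx(state, control, target):
    new_state = list(state)
    for row in range(len(state)):
        if (row >> control) & 1:
            new_state[row ^ (1 << target)] = state[row]
    return new_state


def apply_ccx(state, control1, control2, target):
    new_state = list(state)
    for row in range(len(state)):
        if (row >> control1) & 1 and (row >> control2) & 1:
            new_state[row ^ (1 << target)] = state[row]
    return new_state


def increment(state):
    # Highest carry first, so each carry sees the lower qubits
    # before they are flipped.
    state = apply_ccx(state, 0, 1, 2)  # carry into qubit 2 when qubits 0 and 1 are 1
    state = apply_cx(state, 0, 1)      # carry into qubit 1 when qubit 0 is 1
    state = apply_x(state, 0)          # add one to qubit 0
    return state


def reversed_increment(state):
    # The same three operations in the opposite order.
    state = apply_x(state, 0)
    state = apply_cx(state, 0, 1)
    state = apply_ccx(state, 0, 1, 2)
    return state


def encode(number):
    """A state vector with 100% probability in the row for `number`."""
    state = [0.0] * 8
    state[number] = 1.0
    return state


results = [increment(encode(n)).index(1.0) for n in range(8)]
reversed_results = [reversed_increment(encode(n)).index(1.0) for n in range(8)]
print(results)
print(reversed_results)
```

Because each of these operations undoes itself, running them in reverse order undoes the increment, so the reversed sequence subtracts one instead of adding one.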

Implementing in Qiskit

Let’s create this increment operation as a Python function. (You can grab the complete Python script from here, or just type in the code below.)

```python
import numpy as np
from qiskit import QuantumCircuit, QuantumRegister
from qiskit.quantum_info import Statevector

# Add the operations to an algorithm that increments the number
# encoded on a 3 qubit state vector
def add_increment(algo):
    algo.ccx(0, 1, 2)  # Carry the one to qubit 2, when qubits 0 and 1 are |11>
    algo.cx(0, 1)      # Carry the one to qubit 1, when qubit 0 is |1>
    algo.x(0)          # Add one to qubit 0
```

Now we can test it out.

```python
q = QuantumRegister(3)     # We want 3 qubits
algo1 = QuantumCircuit(q)  # Construct an algorithm on a quantum computer

# Start in the |2> row
algo1.x(1)

v1 = Statevector(algo1)
print(np.real_if_close(v1.data))
```

$$\begin{bmatrix}
0.0 \\
0.0 \\
1.0 \\
0.0 \\
0.0 \\
0.0 \\
0.0 \\
0.0
\end{bmatrix}$$

```python
# Increment the number encoded in the state vector
add_increment(algo1)

v2 = Statevector(algo1)
print(np.real_if_close(v2.data))
```

$$\begin{bmatrix}
0.0 \\
0.0 \\
0.0 \\
1.0 \\
0.0 \\
0.0 \\
0.0 \\
0.0
\end{bmatrix}$$

```python
# Increment the number once more
add_increment(algo1)

v3 = Statevector(algo1)
print(np.real_if_close(v3.data))
```

$$\begin{bmatrix}
0.0 \\
0.0 \\
0.0 \\
0.0 \\
1.0 \\
0.0 \\
0.0 \\
0.0
\end{bmatrix}$$

We are successfully incrementing the number encoded in the state vector each time.

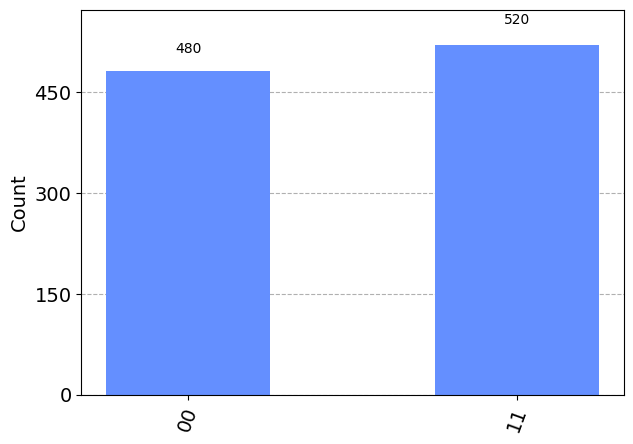

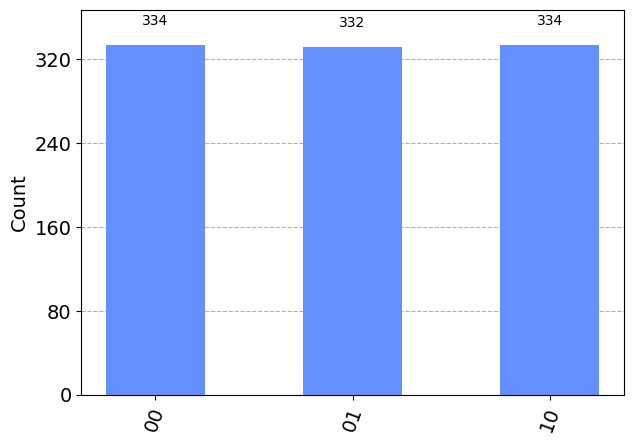

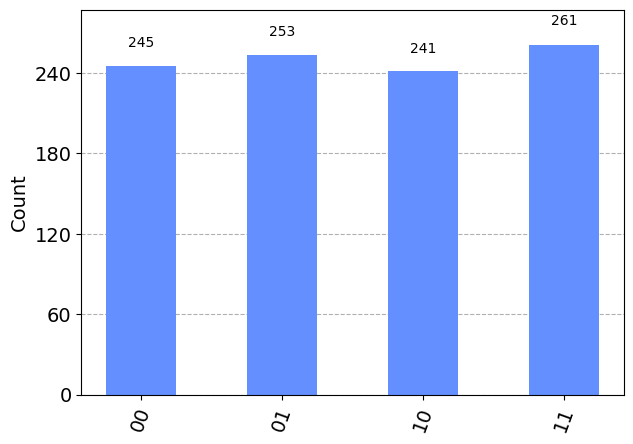

Doing multiple increments simultaneously

What if multiple numbers were encoded in the state vector? It turns out that the same algorithm continues to work.

Let’s start by encoding two numbers, so rather than one row having a 100% probability, the state vector will have two rows, each with the value 1/√2. Remember that we square this value to get the probability, which will be 1/2 or 50%.
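As a quick check of that arithmetic, squaring the value in each of the two rows and adding the results gives the expected total probability of 1:

$$\left(\frac{1}{\sqrt{2}}\right)^2 + \left(\frac{1}{\sqrt{2}}\right)^2 = \frac{1}{2} + \frac{1}{2} = 1$$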

In Qiskit, we will encode both |0> and |4> by applying H to qubit 2, and then apply the increment operation.

```python
algo2 = QuantumCircuit(q)  # Construct an algorithm on a quantum computer

# Start with |0> and |4> rows having equal probability
# (H on qubit 2 acts on the pair of rows |000> and |100>)
algo2.h(2)

v4 = Statevector(algo2)
print(np.real_if_close(v4.data))
```

$$\begin{bmatrix}
\frac{1}{\sqrt{2}} \\
0.0 \\
0.0 \\
0.0 \\
\frac{1}{\sqrt{2}} \\
0.0 \\
0.0 \\
0.0
\end{bmatrix}$$

```python
# Increment the numbers encoded in the state vector
add_increment(algo2)

v5 = Statevector(algo2)
print(np.real_if_close(v5.data))
```

$$\begin{bmatrix}
0.0 \\
\frac{1}{\sqrt{2}} \\
0.0 \\
0.0 \\
0.0 \\
\frac{1}{\sqrt{2}} \\
0.0 \\
0.0
\end{bmatrix}$$

Now it has rows |1> and |5> with equal probability. Two increments have been performed simultaneously, without changing the increment operation at all. (In fact, the increment operation can also be thought of as a rotation of the state vector, where every value moves down one row, and the value in the last row, |7>, wraps round to row |0>.)
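The “rotation” view can be checked with a plain-Python sketch (no Qiskit). It assumes the same list model as before, with qubit k as bit k of the row number; `apply_h`, `apply_x`, `apply_cx`, `apply_ccx` and `increment` are hypothetical helpers, with `apply_h` following the H description from the summary table. It first rebuilds the two-number state from H on qubit 2, then confirms that incrementing any vector moves every value down one row, cyclically.

```python
import math

# Plain-Python sketch: row r holds qubit k in bit k of r (qubit 0 rightmost).

def apply_h(state, target):
    """For each pair of rows differing only in `target`, replace the values
    with their sum and difference, each divided by sqrt(2)."""
    new_state = list(state)
    for row in range(len(state)):
        if ((row >> target) & 1) == 0:   # first row of each pair
            partner = row | (1 << target)
            a, b = state[row], state[partner]
            new_state[row] = (a + b) / math.sqrt(2)
            new_state[partner] = (a - b) / math.sqrt(2)
    return new_state


def apply_x(state, target):
    new_state = list(state)
    for row in range(len(state)):
        new_state[row ^ (1 << target)] = state[row]
    return new_state


def apply_cx(state, control, target):
    new_state = list(state)
    for row in range(len(state)):
        if (row >> control) & 1:
            new_state[row ^ (1 << target)] = state[row]
    return new_state


def apply_ccx(state, control1, control2, target):
    new_state = list(state)
    for row in range(len(state)):
        if (row >> control1) & 1 and (row >> control2) & 1:
            new_state[row ^ (1 << target)] = state[row]
    return new_state


def increment(state):
    state = apply_ccx(state, 0, 1, 2)
    state = apply_cx(state, 0, 1)
    return apply_x(state, 0)


state = apply_h([1.0] + [0.0] * 7, 2)  # rows |0> and |4> each hold 1/sqrt(2)
after = increment(state)
start_rows = [row for row, value in enumerate(state) if value != 0.0]
end_rows = [row for row, value in enumerate(after) if value != 0.0]
print(start_rows, end_rows)

# Any vector is rotated by one row, with the last value wrapping to the front
values = [0.1 * k for k in range(8)]
print(increment(values) == values[-1:] + values[:-1])
```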

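It is this sort of capability, applying one operation to many encoded values at once, that hints at the power of quantum computers to dramatically speed up some types of computation, although measuring the state vector still returns only one of the encoded results at a time.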

In conclusion

We have added two more operations to our set, and seen how to use them on a quantum computer to perform a traditional digital computer function (incrementing a number). We’ve also seen how quantum computers can enhance digital functions, like performing multiple increments at once. Here is the set of operations we’ve talked about so far:

| Operation | Short-hand description | Specified by | Detailed description |
|---|---|---|---|
| H | “half” | 1 qubit | For all pairs of rows that differ only by the value of a specific qubit in the outcome, replace the first row value with a new value that is the sum of the original values divided by √2, and the second row value with the difference between the original values divided by √2. |
| CX | “constrained swap” | 2 qubits | For all pairs of rows where the first qubit specified is in the \|1> state in the outcome, and where otherwise the rows differ only by the value of the second qubit specified, swap the rows in the pair. |
| RY | “relative swap” | 1 angle and 1 qubit | For all pairs of rows that differ only by the value of a specific qubit in the outcome, swap a fraction “f” of the value from the first row to the second, and bring the same fraction from the second row to the first but with the sign flipped, where the angle is specified as 2 × arcsin(√f). If “f” is 1.0, the angle will be 𝜋. |
| X | “swap” | 1 qubit | For all pairs of rows that differ only by the value of a specific qubit in the outcome, swap the values in the pair. |
| CCX | “doubly constrained swap” | 3 qubits | For all pairs of rows where both the first and second qubit specified are in the \|1> state in the outcome, and where otherwise the rows differ only by the value of the third qubit specified, swap the rows in the pair. |
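The H and RY rows of the table can also be sketched in plain Python. This assumes the standard RY definition used by Qiskit, where the first row of each pair becomes cos(θ/2)·a − sin(θ/2)·b and the second becomes sin(θ/2)·a + cos(θ/2)·b; `apply_h`, `apply_ry` and `angle_for_fraction` are hypothetical helper names. It checks that an angle of 2 × arcsin(√f) moves a fraction f of the probability, and that an angle of 𝜋 swaps the pair with a sign flip.

```python
import math

# Plain-Python sketch: row r holds qubit k in bit k of r (qubit 0 rightmost).

def apply_h(state, target):
    new_state = list(state)
    for row in range(len(state)):
        if ((row >> target) & 1) == 0:  # first row of each pair
            partner = row | (1 << target)
            a, b = state[row], state[partner]
            new_state[row] = (a + b) / math.sqrt(2)      # sum / sqrt(2)
            new_state[partner] = (a - b) / math.sqrt(2)  # difference / sqrt(2)
    return new_state


def apply_ry(state, angle, target):
    c, s = math.cos(angle / 2), math.sin(angle / 2)
    new_state = list(state)
    for row in range(len(state)):
        if ((row >> target) & 1) == 0:
            partner = row | (1 << target)
            a, b = state[row], state[partner]
            new_state[row] = c * a - s * b      # sign flipped on what comes back
            new_state[partner] = s * a + c * b
    return new_state


def angle_for_fraction(f):
    """Angle that moves a fraction f of the probability: 2 x arcsin(sqrt(f))."""
    return 2 * math.asin(math.sqrt(f))


print(apply_h([1.0, 0.0], 0))  # both rows hold 1/sqrt(2)

f = 0.25
moved = apply_ry([1.0, 0.0], angle_for_fraction(f), 0)
print([v ** 2 for v in moved])  # probabilities close to 0.75 and 0.25

print(math.isclose(angle_for_fraction(1.0), math.pi))
swapped = apply_ry([0.6, 0.8], math.pi, 0)
print(swapped)  # close to -0.8 and 0.6
```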

The next article will look at a well-known algorithm that performs a task that is complex on a digital computer but is very efficient on a quantum computer.